|

All times in PST (GMT-8), Feb. 26, 2024 |

| 9:00a |

Welcome Remarks: Matthew E. Taylor (University of Alberta) |

| 9:10a |

Invited Session 1 |

| 9:10-9:40a |

Maria Gini (University of Minnesota) - Topic: What would you do if you had 10, 1000, or 10000 robots?

Abstract:

If you had robots to do tasks, how many robots would you use? Does the answer depend on the tasks? Are the tasks independent of each other, so they can be done in parallel with no coordination,

or must some tasks be completed before others? Do the robots need a central controller to coordinate their work, or can each robot work independently? Who will decide which robot does what task? These are

central research questions that I will address in the talk.

|

| 9:40-10:10a |

Aaron Courville (Université de Montréal) - Topic: Q-value Shaping

Abstract:

In various real-world scenarios, interactions among agents often resemble the dynamics of general-sum games,

where each agent strives to optimize its own utility. Despite the ubiquitous relevance of such settings,

decentralized machine learning algorithms have struggled to find equilibria that maximize individual utility

while preserving social welfare. In this talk I will discuss our latest efforts in this direction by introducing Q-value Shaping,

a novel decentralized RL algorithm tailored to optimizing an agent's individual utility while fostering cooperation among adversaries in partially competitive environments.

We assume that, during training, the opponent samples actions proportionally to their action-value function Q, and we further assume that the agent has access to this Q function.

Experimental results demonstrate the effectiveness of our approach at achieving state-of-the-art performance in benchmark scenarios such as the Iterated Prisoner's Dilemma

and the Coin Game. We believe this method to be an important step toward training agents for practical multi-agent applications.

|

| 10:10-10:15a |

Q/A and Discussion for Invited Session 1 |

| 10:15a |

Contributed Papers - Oral Presentations

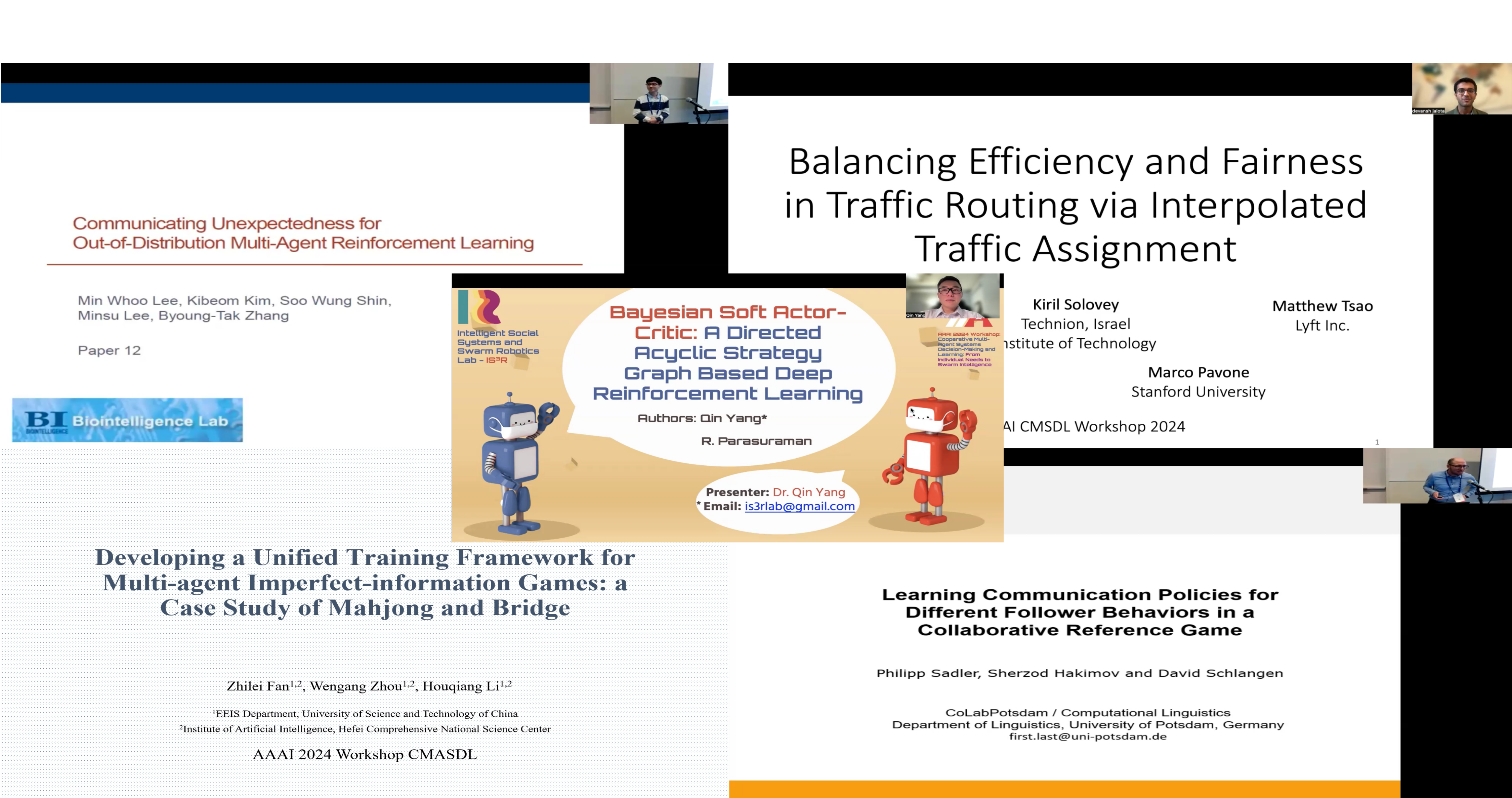

10:15-10:27a --- Communicating Unexpectedness for Out-of-Distribution Multi-Agent Reinforcement Learning

10:27-10:39a --- Balancing Fairness and Efficiency in Traffic Routing via Interpolated Traffic Assignment

10:39-10:51a --- Bayesian Soft Actor-Critic: A Directed Acyclic Strategy Graph Based Deep Reinforcement Learning

10:51-11:03a --- Learning Communication Policies for Different Follower Behaviors in a Collaborative Reference Game

11:03-11:15a --- Developing a Unified Training Framework for Multi-agent Imperfect-information Games: a Case Study of Mahjong and Bridge

|

| 11:15a |

Invited Session 2 |

| 11:15-11:45a |

Giovanni Beltrame (Polytechnique Montreal) - Topic: The role of hierarchy in multi-agent decision making

Abstract:

The emerging behaviors of swarms have fascinated scientists and gathered significant interest in the field of robotics.

While most robot swarms seen in the literature are egalitarian (i.e., all robots have identical roles and capabilities), recent evidence suggests that

introducing hierarchy (i.e. some robots act as local decision makers) is essential to successfully deploy robot swarms in a wider range of practical applications.

While their abundance in nature hints that hierarchies may have certain advantages over egalitarian swarms, the conditions favoring hierarchies have not been empirically

demonstrated. We show evidence that egalitarian swarms perform well in environments that are comparable in size to the collective sensing capability of the swarm,

but will eventually fail as environments become larger or more complex. We show how hierarchies extend the overall sensing capability of the swarm in a resource-effective manner,

succeeding in larger and less structured environments with fewer resources.

|

| 11:45-12:15p |

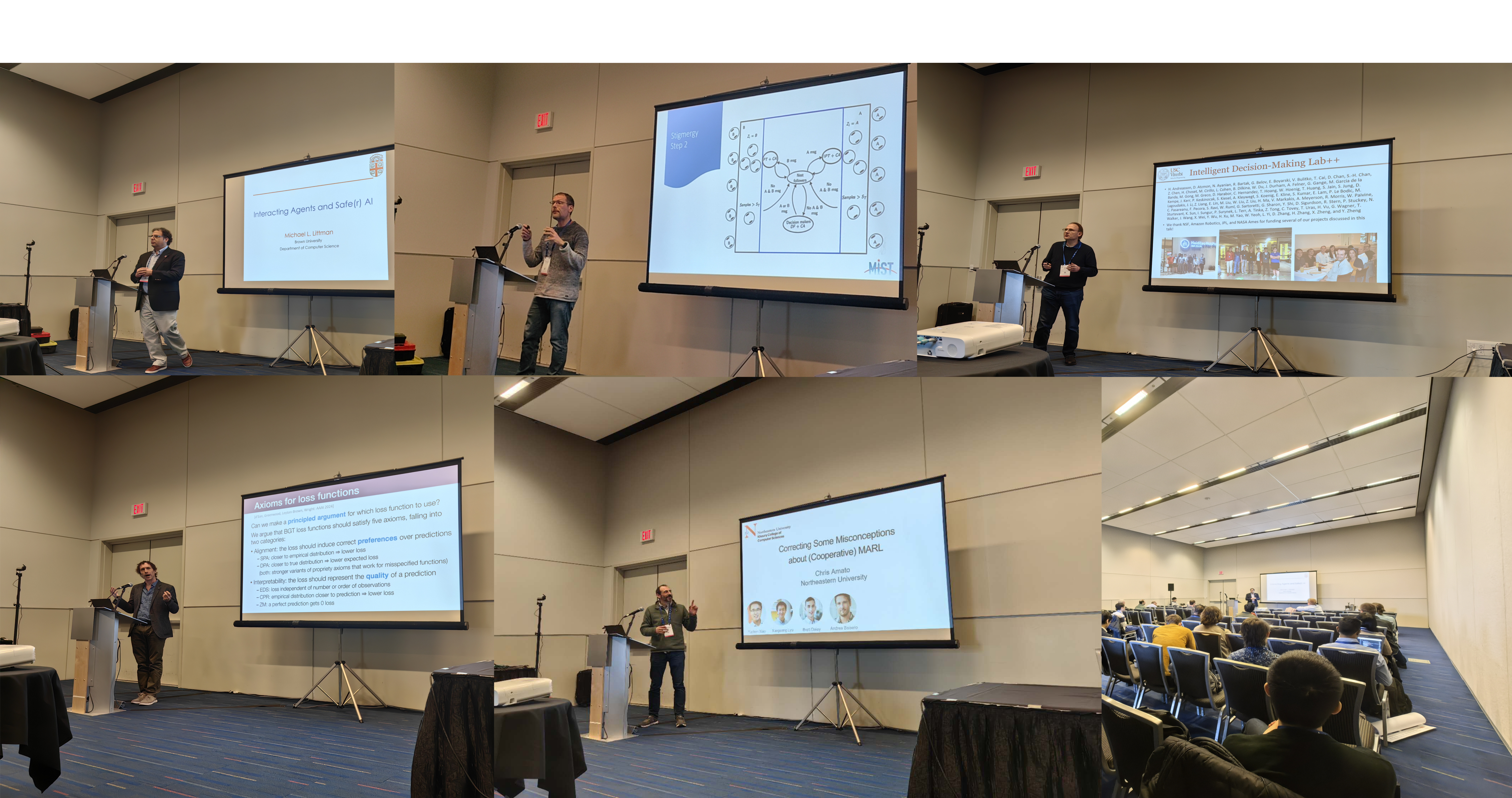

Michael L. Littman (Brown University) - Topic: Interacting Agents and Safe(r) AI

Abstract:

RL plays an important role in creating modern chatbots. This talk explores how some

of the current shortcomings in chatbot creation can be mitigated by taking a multiagent perspective

and proposes that considerably more human feedback is needed to create chatbots that would

generally be seen as "safe" and reliable.

|

| 12:15-12:20p |

Q/A and Discussion for Invited Session 2 |

| 12:20p |

Break |

| 2:00p |

Contributed Papers - Poster Session I

2:00-2:05p --- Cognitive Multi-agent Q-Learning for Cooperation in Competitive Environments

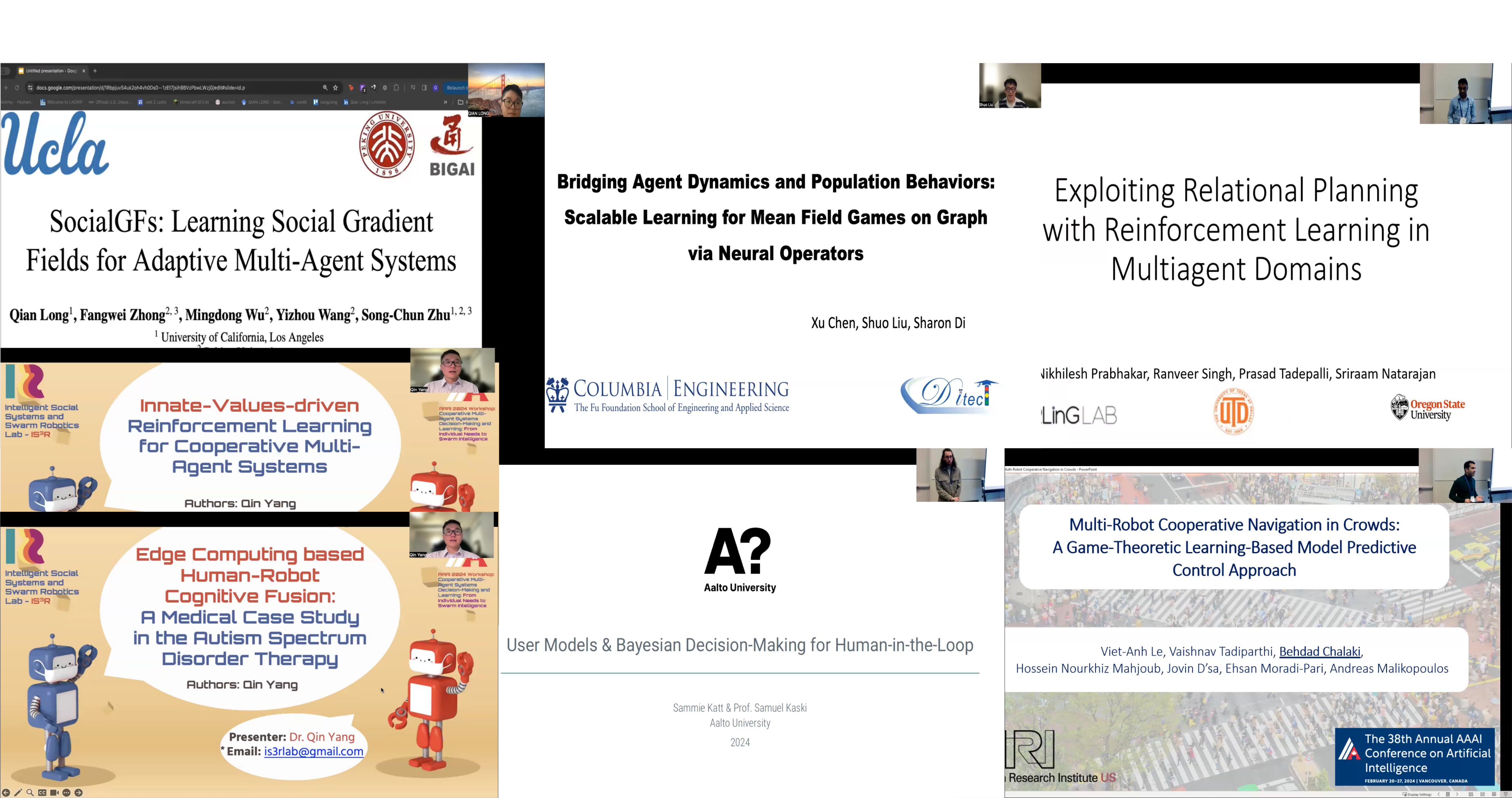

2:05-2:10p --- SocialGFs: Learning Social Gradient Fields for Multi-Agent Reinforcement Learning

2:10-2:15p --- Innate-Values-driven Reinforcement Learning for Cooperative Multi-Agent Systems

2:15-2:20p --- Bridging Agent Dynamics and Population Behaviors: Scalable Learning for Mean Field Games on Graph via Neural Operators

2:20-2:25p --- Edge Computing based Human-Robot Cognitive Fusion: A Medical Case Study in the Autism Spectrum Disorder Therapy

2:25-2:30p --- Q/A

|

| 2:30p |

Invited Session 3 |

| 2:30-3:00p |

Sven Koenig (USC) - Topic: Multi-Agent Pathfinding and Its Applications

Abstract:

The coordination of robots and other agents is becoming increasingly important for industry. For example, on the order of one thousand robots navigate autonomously

in Amazon fulfillment centers to move inventory pods all the way from their storage locations to the picking stations that need the products they store (and vice versa).

Optimal and, in some cases, even approximately optimal path planning for these robots is NP-hard, yet one must find high-quality collision-free paths for them in real time.

Algorithms for such multi-agent path-finding problems have been studied in robotics and theoretical computer science for a long time but fall short, since they are either

fast but yield insufficient solution quality or yield good solution quality but are too slow. In this talk, I will discuss different variants of multi-agent path-finding

problems and cool ideas for both solving them (in centralized and decentralized ways) and executing the resulting plans robustly. I will also discuss several applications of

the technology (funded by NSF and Amazon Robotics), including warehousing, manufacturing, and train scheduling.

|

| 3:00-3:30p |

Christopher Amato (Northeastern University) - Topic: Correcting Some Misconceptions about MARL

Abstract:

Multi-agent reinforcement learning (MARL) has exploded in popularity, but there is a lack of understanding of when current methods work and what the best way to learn in multi-agent settings is.

This talk will include some of the fundamental challenges and misunderstandings of multi-agent reinforcement learning. In particular, it will discuss how 1) centralized critics are not strictly

better than decentralized critics in MARL (and can be worse), and 2) state-based critics are unsound and work well only in fully observable multi-agent problems. Furthermore, it will discuss

related methods in value-based MARL.

|

| 3:30-3:35p |

Q/A and Discussion for Invited Session 3 |

| 3:35p |

Contributed Papers - Poster Session II

3:35-3:40p --- User Models and Bayesian Decision-Making for Human-in-the-Loop Problems

3:40-3:45p --- Multi-Robot Cooperative Navigation in Crowds: A Game-Theoretic Learning-Based Model Predictive Control Approach

3:45-3:50p --- Exploratory Training: When Annotators Learn About Data

3:50-3:55p --- Exploiting Relational Planning and Task-Specific Abstractions for Multiagent Reinforcement Learning in Relational Domains

3:55-4:00p --- Q/A

|

| 4:00p |

Invited Session 4 |

| 4:00-4:30p |

Marco Pavone (Stanford University) - Topic: Artificial Currency Based Government Welfare Programs: From Optimal Design to Audit Games

Abstract:

Artificial currencies have grown in prominence in many real-world resource allocation settings, helping alleviate fairness and equity concerns of traditional monetary mechanisms

that often favor users with higher incomes. In particular, artificial currencies have gained traction in government welfare programs that support eligible users in the population,

e.g., transit benefits programs provide eligible users with subsidized public transit. While such artificial currency based welfare programs are typically well-intentioned and offer

immense potential in improving the outcomes for the eligible group of users, the deployment of many such programs in practice is nascent; hence, the efficacy and optimal design of such

programs still need to be formalized. Moreover, such programs are susceptible to several fraud mechanisms, with a notable concern being misreporting fraud, wherein users can misreport

their private attributes to gain access to more artificial currency (credits) than they are entitled to.

This talk introduces models and methods to study the equilibrium outcomes and the optimal design of such artificial currency based welfare programs to achieve particular societal objectives

of an administrator running the benefits program. Moreover, to address the issue of misreporting fraud, we propose a natural audit game, wherein the administrator can audit users at some cost

and levy fines against them for misreporting information. Methodologically, we propose a bi-level optimization framework to optimally design artificial currency based welfare mechanisms and

develop convex and linear programming approaches to compute the associated equilibrium outcomes. Finally, to highlight the practical viability of our proposed methods, we present case studies

in the context of two welfare programs: (i) San Mateo County’s Community Transport Benefits Program, wherein users are provided with travel credits to offset some of their payments for using

tolled express lanes on highways, and (ii) Washington D.C.’s federal transit benefits programs that provide subsidized public transit to federal employees.

|

| 4:30-5:10p |

Kevin Leyton-Brown (UBC) - Topic: Modeling Nonstrategic Human Play in Games

Abstract:

It is common to assume that players in a game will adopt Nash equilibrium strategies.

However, experimental studies have demonstrated that Nash equilibrium is often a poor description of human players' behavior,

even in unrepeated normal-form games. Nevertheless, human behavior in such settings is far from random. Drawing on data from real human play,

the field of behavioral game theory has developed a variety of models that aim to capture these patterns.

This talk will survey over a decade of work on this topic, built around the core idea of treating behavioral game theory as a machine learning problem.

It will touch on questions such as:

- Which human biases are most important to model in single-shot game theoretic settings?

- What loss function should be used to evaluate and fit behavioral models?

- What can be learned by examining the parameters of these models?

- How can richer models of nonstrategic play be leveraged to improve models of strategic agents?

- When does a description of nonstrategic behavior "cross the line" and deserve to be called strategic?

- How can advances in deep learning be used to yield stronger (albeit harder to interpret) models?

Finally, there has been much recent excitement about large language models such as GPT-4.

Time permitting, the talk will conclude by describing how the economic rationality of such models can be assessed and

presenting some initial experimental findings showing the extent to which these models replicate human-like cognitive biases.

|

| 5:10-5:15p |

Q/A and Discussion for Invited Session 4 |

| 5:15-5:20p |

Concluding Remarks |